Subsurface Scattering

by Juchan Kim & Kai-Sern Lim

Abstract

We attempted to extend the raytracer project to implement subsurface scattering in order to render translucent materials. Translucent materials do not reflect all incoming light at the point where it hits the surface: some of the light enters the material, scatters inside it, and exits at other nearby locations. The (Jensen 2001) paper models this with a combination of a dipole model (for diffusion) and a single-scattering model.

Technical Approach

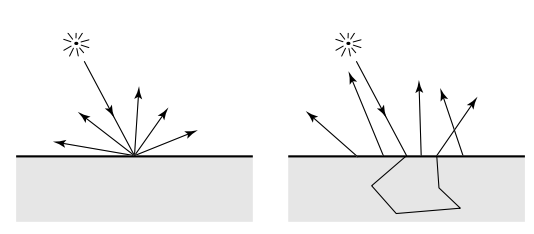

Dipole Model

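From the original (Jensen 2001) paper, the outgoing radiance at a point $x_o$ in direction $\vec\omega_o$ is

$$L_o(x_o, \vec\omega_o) = \int_A \int_{2\pi} S(x_i, \vec\omega_i; x_o, \vec\omega_o)\, L_i(x_i, \vec\omega_i)\, (\vec n \cdot \vec\omega_i)\, d\omega_i\, dA(x_i).$$

The complete BSSRDF $S$ is approximated by a sum of two terms, a diffusion term and a single-scattering term: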

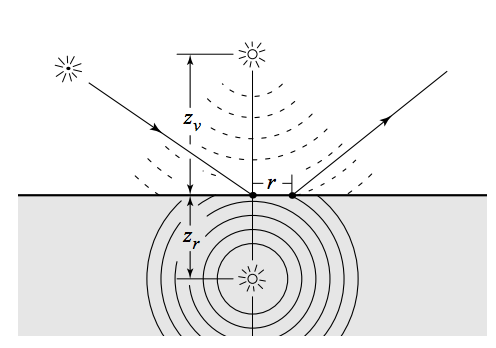

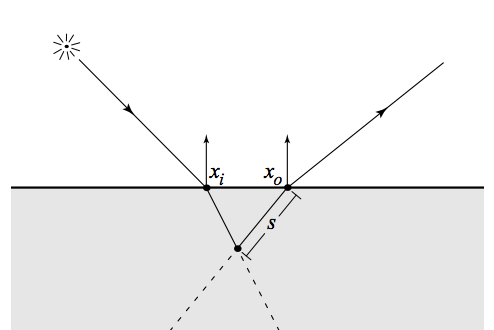

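Here $S = S_d + S^{(1)}$ and

$$S_d(x_i, \vec\omega_i; x_o, \vec\omega_o) = \frac{1}{\pi}\, F_t(\eta, \vec\omega_i)\, R_d(\lVert x_i - x_o \rVert)\, F_t(\eta, \vec\omega_o).$$

To approximate diffusion, an incoming ray is transformed into a dipole source with a real and a virtual light source. $F_t$ is the Fresnel transmittance term for dielectric surfaces, and $R_d(r)$ is the diffuse reflectance due to the dipole source, which decays exponentially as a function of $r = \lVert x_i - x_o \rVert$ (the distance from the raytraced point).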
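As a hedged sketch of the Fresnel factors in the diffusion term, the C++ below computes the unpolarized dielectric Fresnel reflectance $F_r$, takes $F_t = 1 - F_r$, and multiplies two such factors with a precomputed $R_d(r)$ to get $S_d$. The function names are hypothetical (not the raytracer's API), and we assume light crosses between air (index 1) and the material (index $\eta$).

```cpp
#include <cassert>
#include <cmath>

// Fresnel reflectance for unpolarized light going from a medium with index
// eta_i into one with index eta_t. cos_i is the cosine between the incident
// direction and the surface normal on the incident side.
double fresnel_reflectance(double cos_i, double eta_i, double eta_t) {
  assert(cos_i >= 0.0 && cos_i <= 1.0);
  double sin_i2 = 1.0 - cos_i * cos_i;
  double ratio = eta_i / eta_t;
  double sin_t2 = ratio * ratio * sin_i2;  // Snell's law
  if (sin_t2 >= 1.0) return 1.0;           // total internal reflection
  double cos_t = std::sqrt(1.0 - sin_t2);
  double r_par = (eta_t * cos_i - eta_i * cos_t) / (eta_t * cos_i + eta_i * cos_t);
  double r_perp = (eta_i * cos_i - eta_t * cos_t) / (eta_i * cos_i + eta_t * cos_t);
  return 0.5 * (r_par * r_par + r_perp * r_perp);
}

// Fresnel transmittance F_t(eta, w) = 1 - F_r, for light crossing from air.
double fresnel_transmittance(double cos_theta, double eta) {
  return 1.0 - fresnel_reflectance(cos_theta, 1.0, eta);
}

// S_d = (1/pi) F_t(eta, w_i) R_d(r) F_t(eta, w_o), given R_d(r) already
// evaluated. cos_in / cos_out are the cosines of w_i / w_o with the normal.
double diffusion_term(double cos_in, double cos_out, double eta, double Rd_r) {
  const double kPi = 3.14159265358979323846;
  return fresnel_transmittance(cos_in, eta) * Rd_r *
         fresnel_transmittance(cos_out, eta) / kPi;
}
```

At normal incidence with $\eta = 1.5$ this gives the familiar 4% reflectance, so $F_t = 0.96$.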

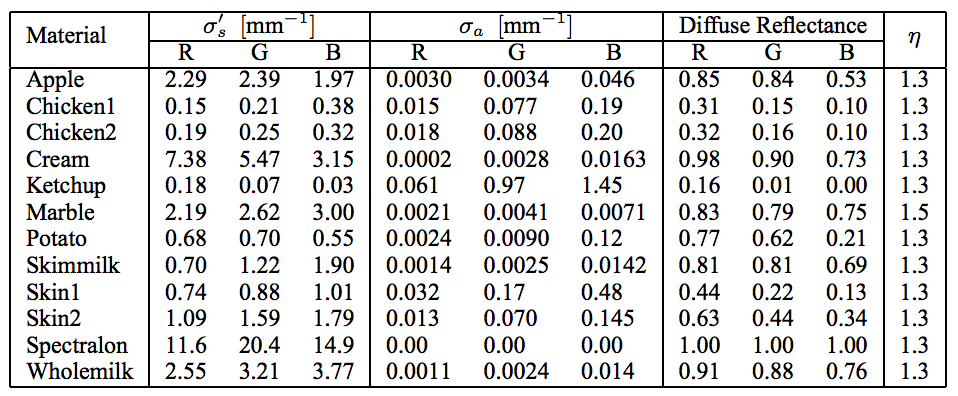

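Diffuse BSSRDF term:

$$R_d(r) = \frac{\alpha'}{4\pi}\left[ z_r\left(\sigma_{tr} d_r + 1\right)\frac{e^{-\sigma_{tr} d_r}}{d_r^{3}} + z_v\left(\sigma_{tr} d_v + 1\right)\frac{e^{-\sigma_{tr} d_v}}{d_v^{3}} \right]$$

where $\sigma_{tr} = \sqrt{3\sigma_a\sigma_t'}$ is the effective extinction coefficient; $z_r = 1/\sigma_t'$ and $z_v = z_r + 4AD$ are the depths of the real and virtual light sources, with $D = 1/(3\sigma_t')$ and $A = (1 + F_{dr})/(1 - F_{dr})$; $d_r = \sqrt{r^2 + z_r^2}$ and $d_v = \sqrt{r^2 + z_v^2}$ are the distances to the real and virtual light sources; and $F_{dr} = -\frac{1.440}{\eta^2} + \frac{0.710}{\eta} + 0.668 + 0.0636\,\eta$ is the rational approximation of the diffuse Fresnel reflectance. Here $\sigma_s'$ is the reduced scattering coefficient, $\sigma_a$ is the absorption coefficient, $\sigma_t' = \sigma_a + \sigma_s'$ is the reduced extinction coefficient, $\alpha' = \sigma_s'/\sigma_t'$ is the reduced albedo, and $\eta$ is the relative index of refraction. The material properties $\sigma_a$, $\sigma_s'$ and $\eta$ must be chosen beforehand; everything else is derived from them. The table of measured coefficients in the Jensen paper lists values for many materials.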
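A minimal C++ sketch of the dipole diffuse reflectance from (Jensen 2001), assuming one color channel at a time and coefficients already expressed in inverse scene units. `DipoleParams`, `diffuse_fresnel_reflectance` and `dipole_Rd` are hypothetical names for illustration.

```cpp
#include <cassert>
#include <cmath>

// Material parameters for one color channel, in inverse scene units.
struct DipoleParams {
  double sigma_a;        // absorption coefficient
  double sigma_s_prime;  // reduced scattering coefficient
  double eta;            // relative index of refraction
};

// Rational approximation of the diffuse Fresnel reflectance F_dr.
double diffuse_fresnel_reflectance(double eta) {
  return -1.440 / (eta * eta) + 0.710 / eta + 0.668 + 0.0636 * eta;
}

// Dipole diffuse reflectance R_d(r), with r = |x_i - x_o|.
double dipole_Rd(const DipoleParams& m, double r) {
  assert(m.sigma_a >= 0.0 && m.sigma_s_prime > 0.0 && r >= 0.0);
  const double kPi = 3.14159265358979323846;
  double sigma_t_prime = m.sigma_a + m.sigma_s_prime;            // reduced extinction
  double alpha_prime = m.sigma_s_prime / sigma_t_prime;          // reduced albedo
  double sigma_tr = std::sqrt(3.0 * m.sigma_a * sigma_t_prime);  // effective extinction
  double Fdr = diffuse_fresnel_reflectance(m.eta);
  double A = (1.0 + Fdr) / (1.0 - Fdr);
  double D = 1.0 / (3.0 * sigma_t_prime);
  double z_r = 1.0 / sigma_t_prime;  // depth of the real source
  double z_v = z_r + 4.0 * A * D;    // distance of the virtual source
  double d_r = std::sqrt(r * r + z_r * z_r);
  double d_v = std::sqrt(r * r + z_v * z_v);
  double real_term = z_r * (sigma_tr * d_r + 1.0) * std::exp(-sigma_tr * d_r) / (d_r * d_r * d_r);
  double virt_term = z_v * (sigma_tr * d_v + 1.0) * std::exp(-sigma_tr * d_v) / (d_v * d_v * d_v);
  return alpha_prime / (4.0 * kPi) * (real_term + virt_term);
}
```

Calling it once per channel with that channel's $\sigma_a$ and $\sigma_s'$ gives the colored falloff; $R_d$ is strictly decreasing in $r$, which is why only a small neighborhood around $x_o$ needs to be sampled.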

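Single-scattering term:

$$L_o^{(1)}(x_o, \vec\omega_o) = \sigma_s(x_o) \int_{2\pi} F\, p(\vec\omega_i' \cdot \vec\omega_o') \int_0^\infty e^{-\sigma_{tc} s}\, L_i(x_i, \vec\omega_i)\, ds\, d\omega_i$$

where $p$ is the Henyey-Greenstein phase function $p(\cos\theta) = \frac{1}{4\pi}\frac{1 - g^2}{(1 + g^2 - 2g\cos\theta)^{3/2}}$, $F = F_t(\eta, \vec\omega_o)\,F_t(\eta, \vec\omega_i)$, and $\vec\omega_i'$, $\vec\omega_o'$ are the refracted incoming and outgoing directions. These terms depend on both the point of entry $x_i$ and the point of outgoing radiance $x_o$. Single scattering occurs when the refracted incoming and outgoing rays intersect, and it is computed as an integral over path lengths $s$. $\sigma_t$ is the extinction coefficient and $\sigma_{tc} = \sigma_t(x_o) + G\,\sigma_t(x_i)$ is the combined extinction coefficient, with geometry factor $G = \lvert \vec n_i \cdot \vec\omega_o' \rvert / \lvert \vec n_i \cdot \vec\omega_i' \rvert$. $s_o'$ is the sampled distance along the refracted outgoing ray, and $s_i' = s_i \lvert \vec\omega_i \cdot \vec n_i \rvert / \sqrt{1 - (1/\eta)^2 (1 - \lvert \vec\omega_i \cdot \vec n_i \rvert^2)}$ approximates the refracted distance along the incoming ray from the observed distance $s_i$.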
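The per-sample pieces of the single-scattering term can be sketched in C++ as follows: the Henyey-Greenstein phase function, Jensen's refracted-distance approximation $s_i'$, and the combined extinction $\sigma_{tc}$. This is a hedged illustration; the function names are hypothetical and all cosines are assumed to be precomputed by the caller.

```cpp
#include <cassert>
#include <cmath>

// Henyey-Greenstein phase function p(cos_theta), asymmetry g in (-1, 1).
// Normalized to integrate to 1 over the sphere of directions.
double henyey_greenstein(double cos_theta, double g) {
  assert(g > -1.0 && g < 1.0);
  const double kPi = 3.14159265358979323846;
  double denom = 1.0 + g * g - 2.0 * g * cos_theta;
  return (1.0 - g * g) / (4.0 * kPi * denom * std::sqrt(denom));  // denom^(3/2)
}

// Approximate refracted distance s_i' along the incoming ray, from the observed
// straight-line distance s_i and cos_in = |w_i . n_i|.
double refracted_distance(double s_i, double cos_in, double eta) {
  double inv_eta = 1.0 / eta;
  return s_i * cos_in / std::sqrt(1.0 - inv_eta * inv_eta * (1.0 - cos_in * cos_in));
}

// sigma_tc = sigma_t(x_o) + G * sigma_t(x_i), with geometry factor
// G = |n_i . w_o'| / |n_i . w_i'| (cosines of the refracted directions).
double combined_extinction(double sigma_t_o, double sigma_t_i,
                           double cos_out_refr, double cos_in_refr) {
  assert(cos_in_refr != 0.0);
  double G = std::fabs(cos_out_refr) / std::fabs(cos_in_refr);
  return sigma_t_o + G * sigma_t_i;
}
```

With $g = 0$ the phase function reduces to the isotropic $1/(4\pi)$, and at normal incidence $s_i' = s_i$, which are handy sanity checks.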

The formulas are all fine and dandy, but you'll notice that they all depend on both the initial point of intersection $x_o$ and a second, nearby point on the surface $x_i$. This is because the radiance leaving a translucent material at a point depends on the light entering the surrounding area, not just on the light arriving at that point. To account for this, we need to sample points on the surface in the area around the intersection point.

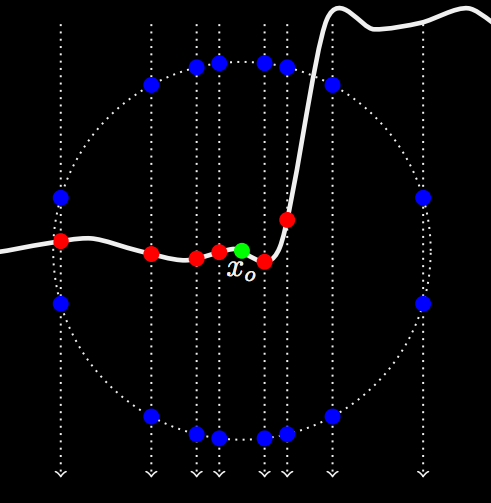

Sampling Positions

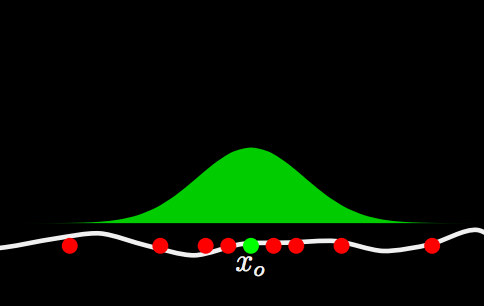

The original (Jensen 2001) paper mentions a sampling method that generates samples in a disk around the original intersection point of the camera ray with the scene. This method works well for flat surfaces, but if the surface is curved, the vast majority of samples will not lie on it. Other sources augment this method by generating points in a disk and then projecting them onto the surface: a probe ray is shot through each point along the normal vector at the original intersection point, and its intersections with the scene are taken as the sample points.
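A small C++ sketch of the disk-plus-probe idea, under stated assumptions: points are sampled uniformly over a disk of radius $R$ in the tangent plane at $x_o$, and each becomes a probe ray parallel to the normal. The `Vec3`, `ProbeRay` and `disk_probe` names are hypothetical, and the scene intersection itself is left to the raytracer.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }

// Orthonormal tangent frame (t, b) for a unit normal n
// (branchless construction of Duff et al. 2017).
void tangent_frame(Vec3 n, Vec3& t, Vec3& b) {
  double sign = std::copysign(1.0, n.z);
  double a = -1.0 / (sign + n.z);
  double c = n.x * n.y * a;
  t = {1.0 + sign * n.x * n.x * a, sign * c, -sign * n.x};
  b = {c, sign + n.y * n.y * a, -n.y};
}

struct ProbeRay {
  Vec3 origin;   // half_len above the disk, on the +n side
  Vec3 dir;      // -n, so the ray passes through the tangent plane
  double max_t;  // 2 * half_len: the probe covers both sides of the disk
};

// Uniformly sample a point on a disk of radius R centered at x_o in the
// tangent plane of n (u1, u2 uniform in [0, 1)) and return a probe ray
// parallel to n through it. Hits with the same BSSRDF material become x_i.
ProbeRay disk_probe(Vec3 x_o, Vec3 n, double R, double half_len,
                    double u1, double u2) {
  assert(R > 0.0 && half_len > 0.0);
  const double kPi = 3.14159265358979323846;
  Vec3 t, b;
  tangent_frame(n, t, b);
  double r = R * std::sqrt(u1);  // sqrt makes the density uniform in area
  double phi = 2.0 * kPi * u2;
  Vec3 on_disk = x_o + (r * std::cos(phi)) * t + (r * std::sin(phi)) * b;
  return {on_disk + half_len * n, -1.0 * n, 2.0 * half_len};
}
```

Starting the probe above the disk and letting it run through the plane catches surface points on either side of the tangent plane, which matters on curved geometry.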

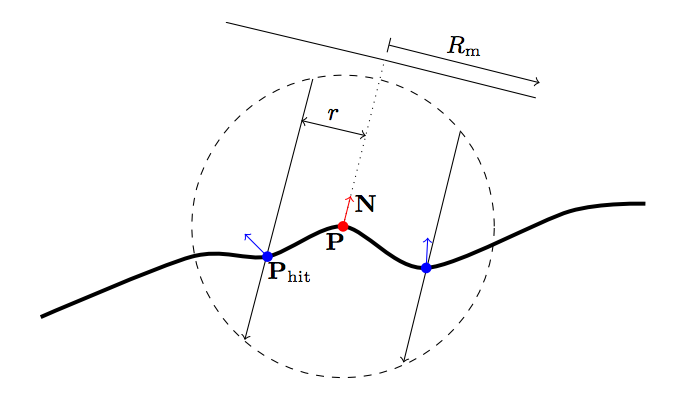

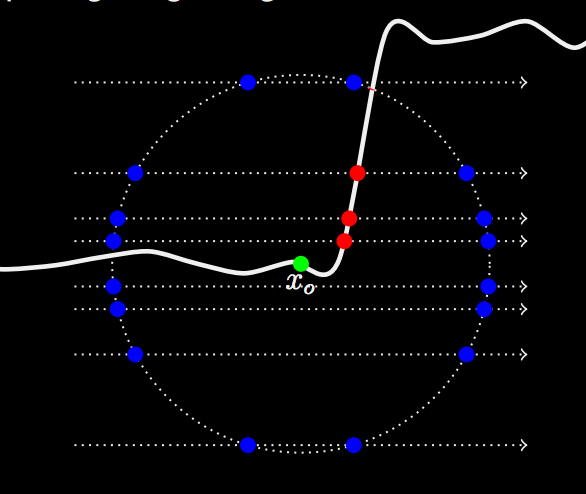

(Kulla 2013) describes a novel technique where, instead of sampling on a single disk, we sample along three different axes (one of which is the normal vector at the point of intersection) within a sphere. We bound a sphere of radius $R_{max}$ around the intersection point, probe rays along the three axes, and take any intersection points within the sphere as the points $x_i$ whose contributed radiance we measure.
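The axis choice and the probe extent can be sketched in C++ like this, assuming the 2:1:1 ratio from the next section and a probe segment clipped to the sphere of radius $R_{max}$. `ProbeAxis`, `pick_axis`, `axis_probability` and `probe_chord_length` are hypothetical names, not taken from (Kulla 2013).

```cpp
#include <cassert>
#include <cmath>

// The three probe axes: the surface normal at x_o and two tangent directions.
enum class ProbeAxis { Normal, Tangent, Bitangent };

// Probability of each axis under a 2:1:1 ratio (normal : tangent : bitangent).
double axis_probability(ProbeAxis a) {
  return a == ProbeAxis::Normal ? 0.5 : 0.25;
}

// Choose an axis from a uniform random number u in [0, 1).
ProbeAxis pick_axis(double u) {
  assert(u >= 0.0 && u < 1.0);
  if (u < 0.5) return ProbeAxis::Normal;
  if (u < 0.75) return ProbeAxis::Tangent;
  return ProbeAxis::Bitangent;
}

// A disk sample at radius r from x_o (in the plane perpendicular to the
// chosen axis) is probed along that axis. Only the part of the line inside
// the sphere of radius R_max around x_o matters: a chord of length
// 2 * sqrt(R_max^2 - r^2), centered on the disk plane.
double probe_chord_length(double r, double R_max) {
  assert(r >= 0.0 && r <= R_max);
  return 2.0 * std::sqrt(R_max * R_max - r * r);
}
```

Clipping the probe to the chord keeps every accepted hit inside the sphere, so no separate distance test against $R_{max}$ is needed.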

Importance Sampling

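(Kulla 2013) describes how the final contribution of each sampled point $x_i$ is divided by its pdf, which combines the probability of reaching $x_i$ through any of the three axes:

$$\mathrm{pdf}(x_i) = \sum_{j} P_j \, \mathrm{pdf}_{disk}(r_j)\, \lvert \vec a_j \cdot \vec n(x_i) \rvert,$$

where $\vec a_j$ is the $j$-th probe axis, $r_j$ is the distance from $x_o$ to $x_i$ projected onto the plane perpendicular to $\vec a_j$, and $\mathrm{pdf}_{disk}$ is the density of the method for sampling random points on a disk. We choose the axis to sample at a ratio of 2:1:1 for the normal direction versus the two other axis directions, so $P_j$ is $1/2$, $1/4$ and $1/4$, and we take this into account when dividing by the pdf.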
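A hedged C++ sketch of that combined pdf, assuming a uniform disk density (a radial profile pdf could be substituted) and that the caller has already computed the projected radii $r_j$ and the cosines $\lvert \vec a_j \cdot \vec n(x_i) \rvert$. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Area density of uniformly sampling a disk of radius R: 1/(pi R^2) inside
// the disk, 0 outside. (Assumed here; swap in a profile-shaped pdf if used.)
double uniform_disk_pdf(double r, double R) {
  const double kPi = 3.14159265358979323846;
  return r <= R ? 1.0 / (kPi * R * R) : 0.0;
}

// Combined pdf of generating surface point x_i through any of the three axes
// (normal, tangent, bitangent), chosen with probabilities 1/2, 1/4, 1/4.
//   r_proj[j]:   distance from x_o to x_i in the plane perpendicular to axis j
//   cos_axis[j]: |axis_j . n(x_i)|, converting the disk's area density into
//                a density over the surface area at x_i
double combined_probe_pdf(const double r_proj[3], const double cos_axis[3],
                          double R) {
  const double axis_prob[3] = {0.5, 0.25, 0.25};
  double pdf = 0.0;
  for (int j = 0; j < 3; ++j) {
    assert(r_proj[j] >= 0.0 && cos_axis[j] >= 0.0);
    pdf += axis_prob[j] * uniform_disk_pdf(r_proj[j], R) * cos_axis[j];
  }
  return pdf;
}
```

On a flat surface facing the normal axis, only the normal-axis term survives ($\cos = 1$ for that axis, $0$ for the tangents), so the pdf is half the disk density.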

Framework Changes

We modified the .dae files by adding parameters so that the raytracer knows the coefficient values it needs to render the material properly. These properties were inserted into the files as XML elements, for example:

```xml
<technique profile="CGL">
  <bssrdf> .. </bssrdf>
</technique>
```

We edited the COLLADA parser, collada.cpp, so that it fetches the new data written into the .dae files:

```cpp
if (type == "bssrdf") {
  // Read the BSSRDF material parameters, starting with the index of refraction.
  XMLElement *e_eta = get_element(e_bsdf, "eta");
  // .....
}
```

We added a BSSRDF class to bsdf.h/bsdf.cpp to represent materials with subsurface scattering, along with an is_bssrdf() method that makes it easy to tell whether a material's BSDF is a BSSRDF. If it is, instead of estimating direct or indirect illumination, we call the subsurface() function in the pathtracer, which adds the outgoing radiance L for both single scattering and diffusion.
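A stripped-down C++ sketch of that dispatch, assuming a virtual is_bssrdf() that defaults to false in the base class. Only the names BSDF, BSSRDF and is_bssrdf() come from the text; `DiffuseBSDF`, `ShadingPath`, `choose_path` and the accessor names are hypothetical stand-ins, not the raytracer's real classes.

```cpp
#include <cassert>

class BSDF {
 public:
  virtual ~BSDF() = default;
  // Ordinary surface BSDFs are not BSSRDFs.
  virtual bool is_bssrdf() const { return false; }
};

class DiffuseBSDF : public BSDF {};

class BSSRDF : public BSDF {
 public:
  BSSRDF(double eta, double sigma_a, double sigma_s_prime)
      : eta_(eta), sigma_a_(sigma_a), sigma_s_prime_(sigma_s_prime) {
    assert(eta > 0.0 && sigma_a >= 0.0 && sigma_s_prime > 0.0);
  }
  bool is_bssrdf() const override { return true; }
  double eta() const { return eta_; }
  double sigma_a() const { return sigma_a_; }
  double sigma_s_prime() const { return sigma_s_prime_; }

 private:
  // Coefficients as parsed from the .dae file.
  double eta_, sigma_a_, sigma_s_prime_;
};

// Which estimator the pathtracer runs at a hit point.
enum class ShadingPath { DirectAndIndirect, Subsurface };

ShadingPath choose_path(const BSDF& bsdf) {
  return bsdf.is_bssrdf() ? ShadingPath::Subsurface
                          : ShadingPath::DirectAndIndirect;
}
```

Keeping the flag as a virtual method means existing BSDF subclasses need no changes: they inherit the `false` default.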

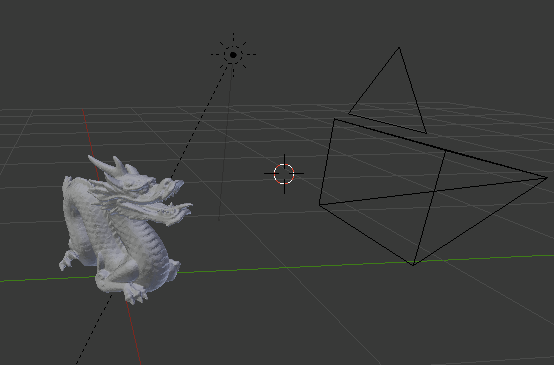

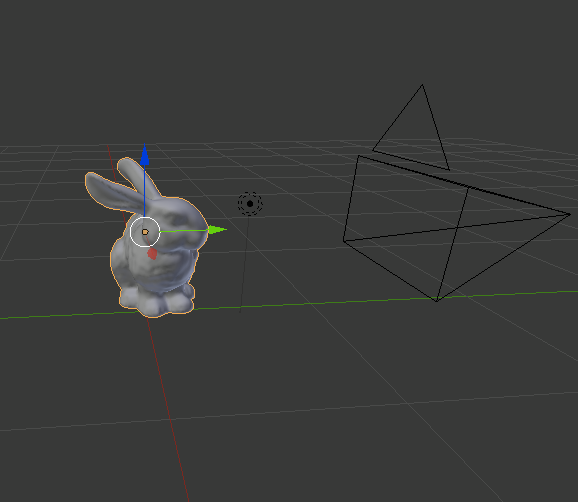

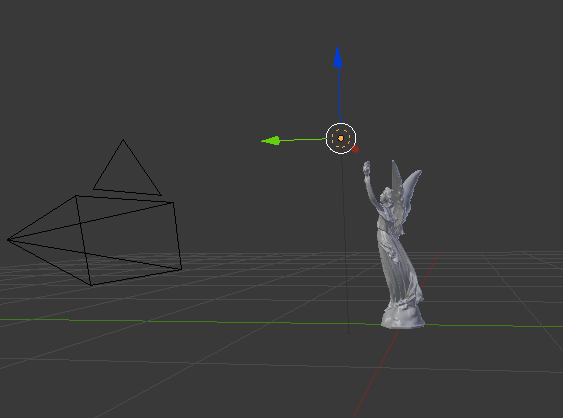

We also modified the .dae models with Blender, changing the light sources to point lights, removing the pedestal, and moving the lights to favorable positions.

Workflow

With these methods and ideas explained, we now describe the resulting workflow of the ray tracer.

Upon an intersection of a ray traced from the camera into the scene, we call the subsurface() method, which calculates the diffusion and single-scattering terms.

For the diffusion term $S_d$, it calculates all the necessary constants and the Fresnel terms $F_t(\eta, \vec\omega_i)$ and $F_t(\eta, \vec\omega_o)$. It generates a probe ray inside the bounding sphere of radius $R_{max}$, takes the intersection point $x_i$ that has the same BSSRDF material, and calculates $R_d(r)$ from the distance between the two locations, $r = \lVert x_i - x_o \rVert$. From that nearby intersection point it sends a shadow ray to sample the light arriving there, and then we combine all the terms to evaluate $S_d$ and divide by the pdf.

For the single-scattering term $L_o^{(1)}$, we refract the traced ray into the material and choose a random distance $s_o'$ along the refracted ray according to an exponential pdf. This gives a new sample point inside the material. From it we shoot a shadow ray toward the light, and where that ray intersects the material surface again we get the entry point $x_i$ on the surface. We then have enough information to evaluate the expression for $L_o^{(1)}$, along with all the constants and parameters. With enough samples, we approximate the ground-truth value of the scene.
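The "sample a distance from an exponential pdf, then divide by the pdf" step can be checked in isolation. This hedged C++ sketch samples $s$ with pdf $\sigma_t e^{-\sigma_t s}$ by inverting the CDF and uses it to estimate the path-length integral $\int_0^\infty e^{-\sigma_{tc} s}\, ds = 1/\sigma_{tc}$, leaving out the lighting and phase terms. The function names and the choice of `std::mt19937` are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Distance along the refracted ray with pdf sigma_t * exp(-sigma_t * s),
// by inverting the CDF: s = -ln(1 - u) / sigma_t for u in [0, 1).
double sample_exponential_distance(double u, double sigma_t) {
  assert(u >= 0.0 && u < 1.0 && sigma_t > 0.0);
  return -std::log(1.0 - u) / sigma_t;
}

double exponential_pdf(double s, double sigma_t) {
  return sigma_t * std::exp(-sigma_t * s);
}

// Monte Carlo estimate of int_0^inf exp(-sigma_tc * s) ds (exactly 1/sigma_tc):
// each sample's integrand value is divided by the pdf it was drawn from.
double estimate_path_integral(double sigma_tc, double sigma_t, int n,
                              unsigned seed) {
  std::mt19937 rng(seed);
  std::uniform_real_distribution<double> uniform(0.0, 1.0);
  double sum = 0.0;
  for (int k = 0; k < n; ++k) {
    double s = sample_exponential_distance(uniform(rng), sigma_t);
    sum += std::exp(-sigma_tc * s) / exponential_pdf(s, sigma_t);
  }
  return sum / n;
}
```

Because $\sigma_{tc} \ge \sigma_t$, each weighted sample is bounded by $1/\sigma_t$, so this estimator has finite variance and converges quickly.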

Problems

Most of our time was spent figuring out what to implement. The (Jensen 2001) paper was not clear at all about how to implement the model, so we had to consult many different resources that explained what the formulas actually mean in an implementation, rather than as a theoretical integral or an implicit formulation.

We spent nearly a whole week with an image that looked no different from the regular diffuse case, apart from being very dark. We later realized that the subsurface scattering model depends on the area near the intersection point, and we were taking light samples and shooting shadow rays from the original intersection point rather than from the nearby sampled points. We also had a second bug where the shadow ray used the wrong origin point, because we were repeating code.

Since the BSSRDF class extended the virtual BSDF class from the existing raytracer, and we added new methods to the BSSRDF class for our separate subsurface() scattering function, we thought we had to add every additional BSSRDF method to the main virtual BSDF class and implement them in all the other classes that extend BSDF too. This was a lot of work. We then realized that since the is_bssrdf() method we included tells us whether a BSDF is a BSSRDF, we could simply handle that case in an if statement and cast the BSDF to a BSSRDF to call the additional functions. We are quite proud of this clever trick.

```cpp
if (isect.bsdf->is_bssrdf()) {
  // is_bssrdf() guarantees the dynamic type, so a static_cast is safe here.
  const BSSRDF *bssrdf = static_cast<const BSSRDF *>(isect.bsdf);
  bssrdf->bssrdf_methods();
  // ….
}
```

We initially had parameter issues with the scale of the constants: the values listed in the table are in units of $\text{mm}^{-1}$, whereas our scenes were not modeled in millimeters. This made the material so translucent that it looked like ice or glass.
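The fix amounts to a unit conversion. As a minimal C++ sketch, assuming one scene unit corresponds to `mm_per_unit` millimeters (a hypothetical parameter the scene author has to decide), coefficients given per millimeter scale linearly into coefficients per scene unit. The struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Coefficients as listed in a measured table, in 1/mm.
struct CoefficientsMM {
  double sigma_a;        // absorption coefficient [1/mm]
  double sigma_s_prime;  // reduced scattering coefficient [1/mm]
};

// The same coefficients expressed per scene unit.
struct CoefficientsScene {
  double sigma_a;
  double sigma_s_prime;
};

// A path of d scene units is d * mm_per_unit millimeters long, so the optical
// depth sigma_mm * (d * mm_per_unit) equals (sigma_mm * mm_per_unit) * d.
// Every coefficient therefore scales by mm_per_unit.
CoefficientsScene to_scene_units(CoefficientsMM c, double mm_per_unit) {
  assert(mm_per_unit > 0.0);
  return {c.sigma_a * mm_per_unit, c.sigma_s_prime * mm_per_unit};
}

// Mean free path 1 / sigma_t' in scene units: a quick sanity check on how far
// light travels inside the material relative to the model's size.
double mean_free_path(CoefficientsScene c) {
  return 1.0 / (c.sigma_a + c.sigma_s_prime);
}
```

For example, if one scene unit is meant to be 100 mm, every table value is multiplied by 100; using the raw values instead makes the mean free path 100 times too long, which is exactly the kind of error that makes a solid look like ice or glass.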

Results

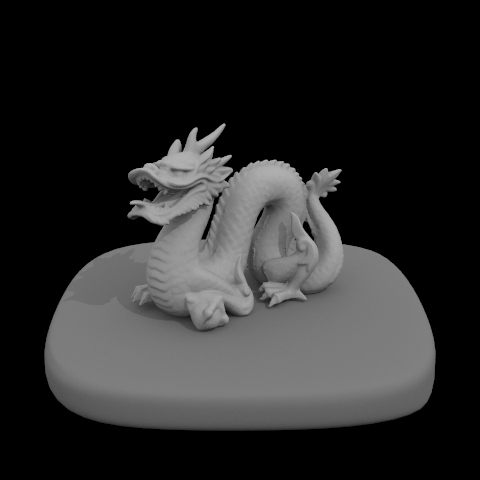

This is what the dragon looks like under regular diffuse lighting.

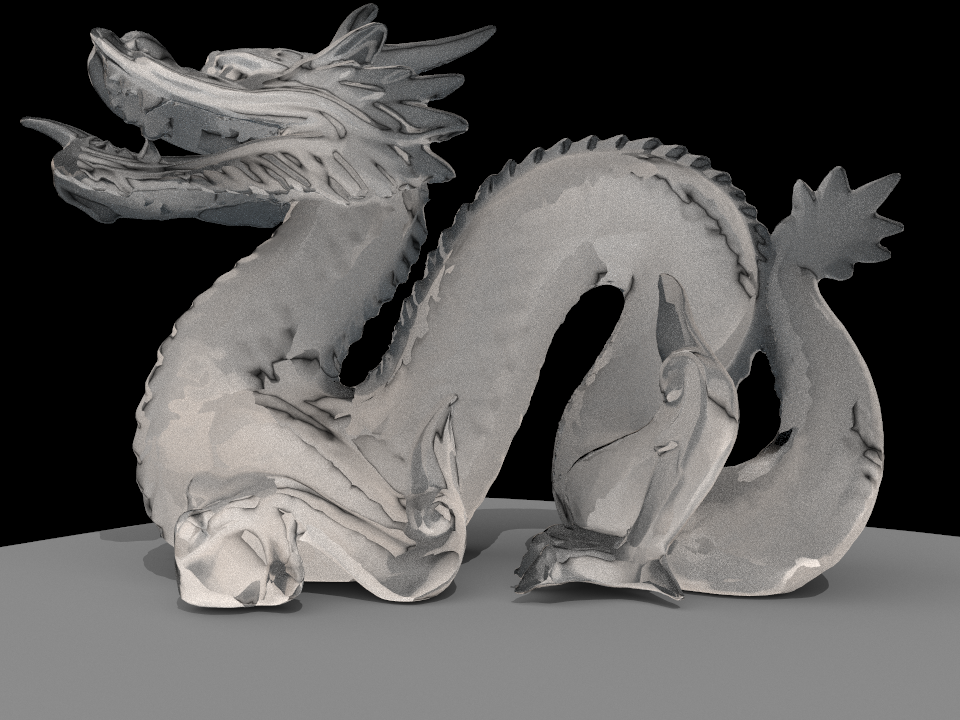

This is what the dragon looks like when we had the issue with the scale of coefficients that made the dragon look like ice or glass.

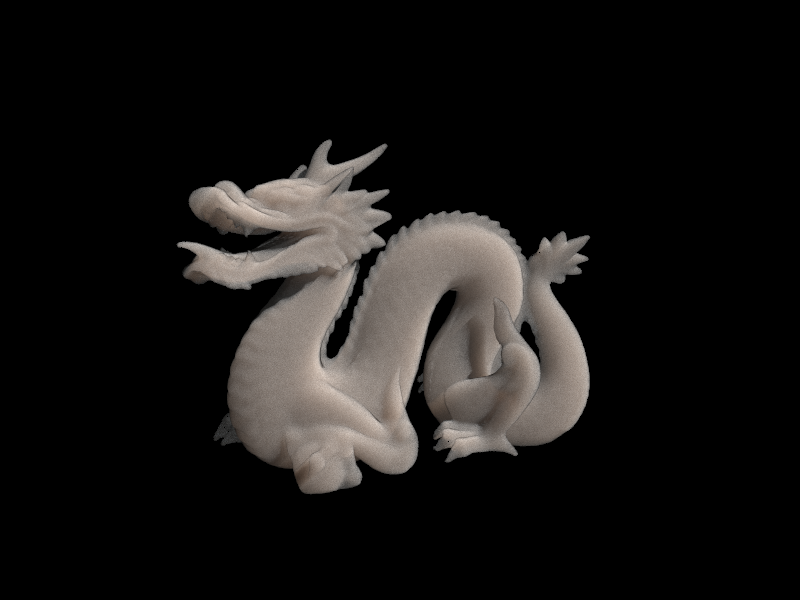

Below are the three resulting images and what the scene file looked like in Blender.

Dragon (Marble)

Bunny (Chicken)

Lucy (Marble)

References

A Practical Model for Subsurface Scattering Light Transport (Jensen 2001)

Fast BSSRDF (Jensen 2002)

BSSRDF Summary (Koutajoki 2002)

Implementing a Skin BSSRDF (Hery 2003)

BSSRDF Notes (Scukanec 2004)

BSSRDF Importance Sampling (Kulla 2013)

Approximate BSSRDF (Christensen 2015)

Contributions

Juchan: .dae file modification and integration with pathtracer. Coding.

Kai: Reference gathering and understanding the math and models. Coding.

We coded most of the project together in the same room.